In today’s connected world, disinformation has become one of the most powerful weapons in modern warfare. It spreads faster than bullets and undermines its targets from within—without a single shot fired. More dangerous than mere falsehoods, disinformation consists of deliberately constructed narratives designed to manipulate perception, erode trust, and achieve strategic objectives by distorting reality itself.

Disinformation differs from traditional propaganda. Propaganda often amplifies a one-sided version of the truth to boost morale or support. In contrast, modern disinformation aims to confuse, divide, and destabilize. It targets civilian populations, military leadership, and political institutions. Moreover, it exploits the open nature of the digital information environment by polluting news cycles, hijacking public discourse, and making truth increasingly difficult to verify.

The Digital Evolution of Disinformation

From Ancient Trickery to Algorithmic Warfare

Disinformation is not new. Military deception has shaped campaigns for millennia—from Sun Tzu’s strategies to World War II’s Operation Fortitude. In that Allied campaign, fake radio chatter, inflatable tanks, and double agents successfully fooled Nazi Germany about the location of the D-Day landings.

During the Cold War, the Soviet Union institutionalized disinformation under “dezinformatsiya,” planting false stories in Western media to steer policy debates. However, these operations required agents, resources, and time.

Today, technology has removed the barriers to entry. A single operator with access to social media, bot networks, and AI tools can now push a fabricated narrative to audiences across borders. As a result, what once required months of planning now unfolds in minutes with global reach. The democratization of influence has made truth a casualty of speed.

Core Tactics of Modern Disinformation Campaigns

Disinformation operations rely on repeatable, scalable tactics that exploit human psychology, platform design, and social fault lines. Consequently, actors using these techniques can impact large populations with minimal resources.

Fake News Websites and Engineered Narratives

Fake news remains one of the most visible tools in the disinformation arsenal. These fabricated stories mimic credible journalism, using cloned websites or fictitious outlets to spread lies. They often include emotionally charged headlines to prompt shares without verification.

For example, during the COVID-19 pandemic, false claims about the virus’s origins, vaccine microchips, and government conspiracies flooded the internet. Investigations later linked these campaigns to state-sponsored actors in Russia, China, and Iran, each seeking to sow distrust in Western institutions.

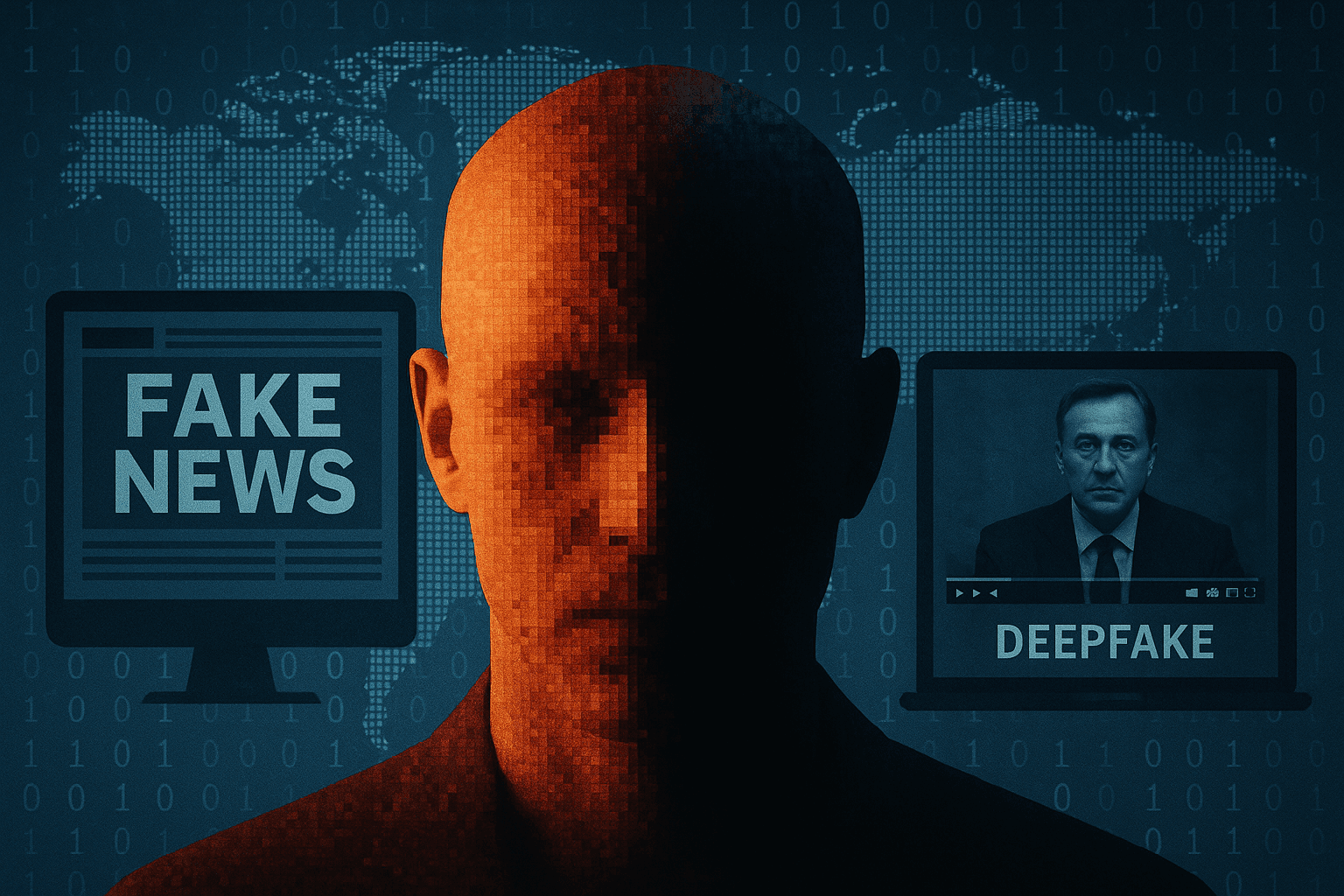

Deepfakes: Trust in the Crosshairs

Deepfakes, meaning AI-generated fake video or audio, represent a dangerous leap forward in deception. In March 2022, a deepfake of Ukrainian President Zelensky appeared online, falsely urging Ukrainian troops to surrender. Although quickly debunked, the video highlighted how believable fabrications could trigger confusion or even battlefield paralysis.

As deepfakes grow more convincing, their strategic uses multiply. A single false video could undermine alliances, disrupt elections, or prompt unintended escalation in a crisis. The stakes rise with every improvement in the technology.

Social Media Manipulation and Bot Networks

Social platforms remain the primary battleground for disinformation campaigns. State-backed troll farms and bot armies amplify polarizing content, distort trending topics, and simulate grassroots support for divisive causes.

A well-documented case is Russia’s Internet Research Agency, which ran thousands of fake accounts to influence the 2016 U.S. presidential election. These profiles, posing as real Americans, stoked tensions around race, immigration, gun rights, and religion, with the aim of deepening divisions and reducing voter confidence.

Meanwhile, the design of social media platforms, built to reward engagement rather than verification, continues to serve as a delivery system for psychological warfare.

Cyber Leaks with a Disinformation Twist

Cyber influence operations combine hacking with narrative warfare. Bad actors infiltrate organizations, steal sensitive data, and selectively release it—often doctored—to shift public opinion or destabilize political opponents.

In 2016, Russian operatives hacked the Democratic National Committee and leaked the emails via WikiLeaks, influencing U.S. voter perceptions. Similarly, in 2023, Iranian hackers targeted Israeli and American agencies, releasing altered documents to weaken trust between allies. By blending real facts with falsehoods, these operations become harder to disprove and easier to believe.

Case Studies: Disinformation in Live Conflicts

Russia’s Disinformation Offensive in Ukraine

Since 2014, Russia has used hybrid warfare—a blend of conventional force and digital disinformation—to justify aggression and suppress opposition. Kremlin-backed outlets like RT and Sputnik broadcast conspiracy theories, such as claims that Ukraine is controlled by neo-Nazis or that NATO provoked the war.

Russia also spreads falsified casualty reports, doctored images, and misleading battlefield footage. These tactics aim to confuse international observers, paralyze diplomacy, and maintain domestic support. Notably, the Kremlin tailors narratives to domestic and global audiences simultaneously.

China’s Narrative Control in Taiwan

In the lead-up to Taiwan’s 2024 election, China used coordinated campaigns to influence voters. Disinformation outlets claimed the U.S. planned to deploy nuclear weapons on the island. Others alleged that pro-independence candidates would provoke war.

These messages spread via Chinese-language platforms, fake local news sites, and paid influencers. The goal was to erode trust in democratic institutions and discourage support for sovereignty.

Iran’s Digital War Against Israel

Iran has waged multi-channel disinformation campaigns targeting Israel, blending cyberattacks with false media narratives. In 2023, Iranian actors created fake Israeli news outlets, publishing fabricated reports about political instability and military failures.

These campaigns work to undermine morale, erode public trust, and pressure Israeli leaders to overreact or lose credibility. In many cases, the goal is not to persuade, but to destabilize and exhaust.

The Strategic Impact of Disinformation

Disinformation is not just about lying. It reshapes the operational environment, degrading the ability of governments, militaries, and citizens to act with confidence. Its effects ripple far beyond the information domain:

- Erodes trust: Constant exposure to false narratives corrodes faith in institutions, journalism, and leadership.

- Destabilizes societies: Polarized populations are easier to manipulate and harder to govern.

- Disrupts military operations: False orders, spoofed intelligence, or panic-inducing deepfakes can paralyze decision-making.

- Undermines diplomacy: In an environment of engineered uncertainty, allies hesitate, and adversaries exploit hesitation.

Therefore, combating disinformation is not just a communications issue—it is a national security priority.

Countering Disinformation

Winning the information war requires more than fact-checking. It demands a proactive, coordinated strategy across multiple fronts.

Public Education and Media Literacy

Governments and schools must train citizens to recognize disinformation tactics. Teaching digital literacy—how to verify sources, detect bias, and question emotional headlines—reduces the power of viral lies.

For instance, Finland has integrated media literacy into its national curriculum and is widely regarded as one of the most disinformation-resilient countries in the world.

Artificial Intelligence for Detection

Tech companies are building AI tools to identify deepfakes, bot activity, and coordinated inauthentic behavior. These tools can scan massive datasets to flag suspicious patterns before they go viral.

That said, this is a cat-and-mouse game. Adversaries are also using AI to generate more convincing content. As a result, detection efforts must evolve constantly.
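To make the idea of flagging suspicious patterns a little more concrete, here is a minimal sketch of one simple heuristic: looking for the same message posted by several distinct accounts within a short time window. This is an illustration only, not how any particular platform's detection works. The `Post` record, the `flag_coordinated` function, and the thresholds (`min_accounts`, `window_seconds`) are all hypothetical names and values chosen for the example, and real systems would likely combine many more signals.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    account: str      # hypothetical account identifier
    text: str         # post body
    timestamp: float  # seconds since an arbitrary reference time


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial edits do not hide copies."""
    return " ".join(text.lower().split())


def flag_coordinated(posts, min_accounts=3, window_seconds=300.0):
    """Return normalized texts posted by at least `min_accounts` distinct
    accounts within some span of `window_seconds` (assumed thresholds)."""
    by_text = defaultdict(list)
    for post in posts:
        by_text[normalize(post.text)].append(post)

    flagged = []
    for text, group in by_text.items():
        group.sort(key=lambda p: p.timestamp)
        start = 0
        for end in range(len(group)):
            # Advance the left edge until the window fits the time limit.
            while group[end].timestamp - group[start].timestamp > window_seconds:
                start += 1
            accounts = {p.account for p in group[start:end + 1]}
            if len(accounts) >= min_accounts:
                flagged.append(text)
                break
    return flagged


if __name__ == "__main__":
    sample = [
        Post("acct_a", "Breaking: the bridge has FALLEN", 0.0),
        Post("acct_b", "breaking:  the bridge has fallen", 40.0),
        Post("acct_c", "Breaking: the bridge has fallen", 95.0),
        Post("acct_d", "Lovely weather today", 100.0),
    ]
    print(flag_coordinated(sample))
```

A heuristic this simple is easy to evade by paraphrasing each copy, which is exactly the cat-and-mouse dynamic described above: as generated text becomes more varied, exact or near-exact matching catches less, and detection has to move toward behavioral and network signals.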

Platform Accountability

Social media companies must enforce stricter rules against disinformation networks. This includes removing fake accounts, labeling manipulated media, and sharing data with independent researchers.

In some cases, public pressure or regulation may be required to overcome platforms’ commercial reluctance to act.

International Collaboration

Disinformation campaigns are often transnational. Democracies must coordinate intelligence sharing, joint investigations, and unified messaging to expose foreign influence efforts. Organizations like NATO and the EU have already begun developing frameworks to counter cross-border information warfare.

Conclusion: Fighting the War of Perception

Disinformation is a battlefield, and every society is a front line. It is cheap, scalable, and devastatingly effective. It targets the most vulnerable point in modern democracies: the human mind.

As adversaries refine their disinformation tactics, defending against them becomes a strategic necessity, not just a journalistic concern. The future of warfare will not be won by bullets and bombs alone, but also by whoever controls the narrative.

To resist disinformation is to safeguard sovereignty. The fight against weaponized lies is not optional—it is the defining information conflict of our time.